The use of image recognition on archaeological material is a very new research area, but other work has been done in this field, showing the potential usefulness of this application to other types of archaeological resources. This includes projects such as DADAISM, which focused on the identification of types of Palaeolithic stone tools (Power et al. 2017) and Arch-I-Scan, which is also working to create an easy-to-use, handheld interface for archaeological pottery recognition, but uses 3D scans rather than 2D images as the basis for its image recognition algorithms (Tyukin et al. 2018).

For ArchAIDE, two complementary machine-learning tools were developed to identify archaeological pottery. One method relied on the shape of a sherd's profile while the other was based on decorative features. For the shape-based identification tool, a novel deep learning methodology was employed, integrating shape information from points along the inner and outer surfaces. Fabric type is also an important tool for identification, but after considerable discussion it was deemed to be less promising and more problematic than shape and decoration-based tools. This was largely due to differences in lighting conditions found across thin-sections, and the difficulty in deriving a sufficient amount of training data.

The decoration-based classifier was based on relatively standard methods used in image recognition. In both cases, training the classifiers presented real-world challenges specific (although not limited) to archaeological data. This included a paucity of exemplars of pottery that were well identified, an extreme imbalance between the number of exemplars across different types, and the need to include rare types or minute distinguishing features within types. The scarcity of training data for archaeological pottery required multiple solutions, the first of which was to initiate large-scale photo campaigns to increase these data.

Multiple photo campaigns were conducted across the life of the project to produce a complete dataset of images for all the ceramic classes under study. The aim of the photo campaigns was to provide a sufficient number of images to train the algorithms for both the appearance-based (Majolica of Montelupo and Majolica from Barcelona) and the shape-based image recognition neural network (Roman amphorae, Terra Sigillata Italica, Hispanica and South Gaulish). ArchAIDE partners led the collection of photos, involving researchers both beyond the consortium and within their own photo campaigns. All partners worked with colleagues, institutions, museums and excavations to acquire the required number of samples. As not all types were stored in a single site, it was necessary to access multiple resources involving more than 30 different institutions in Italy, Spain, and Austria.

To train the shape-based neural network, it was necessary to take diagnostic photos of sherd profiles, so detailed guidelines were prepared for use by the consortium partners and project associates. Finding, classifying, photographing and creating digital storage for the necessary sherds was very time-consuming, as images of at least 10 different sherds for every type were needed to provide enough training information for the algorithm. The ArchAIDE archaeological partners had to verify that the selected sherds were identified correctly, which on occasion also helped institutions (such as museums, research centres, etc.) correct mistakes in the classification of their assemblages. The first approach was to search for at least 10 sherds for each of the 574 different types and sub-types identified in the reference catalogues. It became apparent, however, that not every top-level type and sub-type could be represented. In some instances this was because the type was rare, or because sherds of different types were mixed together when stored, and it was very difficult to locate them. This is a challenge across all forms of pottery studies, not just for a digital application like ArchAIDE. Partners reorganised the search to focus on top-level types, which reduced the number of processed types from 574 to 223.

Overall, 3498 sherds were photographed for training the shape-based recognition model (Figure 9). This included 1311 sherds of Roman amphorae representing 61 different types, with more than 10 sherds photographed for each type. The Terra Sigillata types comprised 2187 sherds, representing 54 'top level' types, again with more than 10 sherds photographed for each type (many with more than 30 sherds).

For appearance-based recognition (Majolica of Montelupo and Majolica of Barcelona), using every image where decoration was visible, it was possible to collect photos originally taken for different purposes, e.g. graduate or PhD theses, archaeological excavations, etc. In these cases, photos were collected, classified, tagged and stored on the basis of the genres of decoration to which they belonged. A larger corpus of photos was collected through photo campaigns in Pisa (at the Superintendency warehouse), in Montelupo Fiorentino (at the local Museo della Ceramica) and in Barcelona (Archaeological Archive of the Museu d'Història de Barcelona and ceramic collection of the Museu del Disseny de Barcelona). In Montelupo Fiorentino, activities were conducted with the help of Francesco Cini, one of the ArchAIDE project associates, a professional archaeologist who collaborates with the Museum, together with a group of local volunteers who manage an archaeological warehouse that stores the largest collection of sherds of Majolica of Montelupo in the world. The final dataset included 10,036 items.

Figure 9: Video of ArchAIDE partners undertaking photo campaigns (43 seconds). Taken from ArchAIDE consortium (2019)

In the case of the Majolica from Barcelona, photo campaigns were conducted not only to create a dataset for training the algorithm, but also to create the first structured catalogue for this type of pottery. For this reason, and in order to manage the work economically, the choice of assemblages was crucial. First, attention was focused on green and manganese ware, as a preliminary study by some members of the consortium was already in progress on a large assemblage stored at the Archaeological Archive of the Museu d'Història de Barcelona. This may be the cornerstone for others to create a full catalogue that will include the other types of decoration for the Majolica pottery of Barcelona and their corresponding typologies. A total of 597 different vessels were documented, generating a dataset of 3640 photos.

The creation of training data for both the shape and decoration-based image recognition classifiers (algorithms) was ongoing throughout the ArchAIDE project, but as the decoration-based work proceeded much more quickly, it became the main focus for solving the image-based search and retrieval workflow developed by the Deep Learning Lab at Tel Aviv University. As such, the discussion that follows concerns primarily the decoration-based work. Shape-based recognition will be discussed in more technical detail elsewhere (Itkin et al. 2019). When defining classes of pottery, the definition of a class does not follow a fixed structure; it may be defined by the use of specific colours or their combination, by the type of patterns, by the areas being embellished, and more. While this sort of variability in the set of defining features is not uncommon in other image classification systems, such as ImageNet (Deng et al. 2009), when combined with the limited number of images readily available for training purposes it poses challenges for training a new classifier.

Training classifiers for deep learning applications typically relies on very large datasets to find meaningful patterns within the data. The arts and humanities typically create datasets that are quite small and often heterogeneous in comparison, and the training data available for ArchAIDE was no exception. Training classifiers from a small dataset has often been tackled using transfer learning: performing the training on a somewhat similar domain, and then fine-tuning the last layers of the neural network (after features have been extracted) to perform inference for the given set of classes. To perform the classification, a classifier was therefore trained based on a Residual Neural Network, commonly known as a ResNet model (He et al. 2016), pre-trained on ImageNet. Specifically, the variant chosen for the ArchAIDE network was ResNet-101, as it had the best reported accuracy of all the variants tested by He et al. (2016). The network operates on RGB (colour) images, which must be resized to 224 × 224 pixels before being fed into the network.

To utilise features at various scales and complexity, features from multiple levels of depth were relied upon in addition to the final feature vector of ResNet. ResNet-101 is composed of a sequence of five blocks, where the features emitted by the last block undergo a linear transformation to obtain the score ranking of the various classes for each input. To exploit the earlier blocks as well, the features from blocks 2-5 were concatenated to obtain one large feature vector, representing features at various complexity levels and receptive fields.

The output of each layer in ResNet represents the activation of a feature in a specific region of the image. To reduce the size of the feature activation vector, while also obtaining more 'global' information on features without reference to their specific location in the image, the activation of each feature was averaged over the entire image ('average pooling') to produce the final feature vector for the classification system.
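The pooling and concatenation steps can be illustrated with a minimal NumPy sketch. It assumes that 'blocks 2-5' correspond to the four residual stages of a standard ResNet-101, whose outputs for a 224 × 224 input have 256, 512, 1024 and 2048 channels; random arrays stand in for real activations, and the names `BLOCK_SHAPES` and `pooled_descriptor` are hypothetical.

```python
import numpy as np

# (channels, height, width) of the four residual stages of a standard
# ResNet-101 for a 224 x 224 input. Mapping the article's "blocks 2-5"
# onto these stages is an assumption of this sketch.
BLOCK_SHAPES = {
    "block2": (256, 56, 56),
    "block3": (512, 28, 28),
    "block4": (1024, 14, 14),
    "block5": (2048, 7, 7),
}


def pooled_descriptor(feature_maps):
    """Average each (channels, height, width) map over its spatial axes,
    then concatenate the per-block results into one vector."""
    pooled = [fmap.mean(axis=(1, 2)) for fmap in feature_maps]
    return np.concatenate(pooled)


# Random arrays stand in for real network activations.
rng = np.random.default_rng(0)
maps = [rng.random(shape) for shape in BLOCK_SHAPES.values()]
descriptor = pooled_descriptor(maps)
print(descriptor.shape)  # (3840,)
```

Under these assumptions the descriptor has 256 + 512 + 1024 + 2048 = 3840 entries, one per feature channel, regardless of where in the image each feature fired.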

To classify the vectors into classes, an SVM classifier was used. As an SVM typically separates vectors between two classes, a One Versus Rest (OVR) technique was used to support multiple classes: one SVM classifier was trained per class to decide whether a feature vector belongs to that class or not. The classes were then ranked based on the confidence scores of their respective classifiers, to obtain an ordering between the different classes. It is important to note that, as the features from different layers in the network are concatenated, their expected ranges of values are likely to differ; each feature was therefore separately normalised to have a mean of 0 and a standard deviation of 1 over the whole training dataset, before being used in the SVM classification process.
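A small sketch of the per-feature normalisation and the one-versus-rest ranking follows. It is illustrative only: the per-class weights are hypothetical placeholders rather than trained SVMs, and the function and class names are invented for the example.

```python
import numpy as np


def fit_standardiser(train_features):
    """Return the per-feature mean and standard deviation over the training set."""
    mean = train_features.mean(axis=0)
    std = train_features.std(axis=0)
    std[std == 0] = 1.0  # guard: a constant feature would otherwise divide by zero
    return mean, std


def standardise(features, mean, std):
    """Shift and scale each feature to mean 0 and standard deviation 1."""
    return (features - mean) / std


def rank_classes(feature_vector, weights, biases, class_names):
    """Rank classes by the score of their one-versus-rest linear classifier."""
    scores = weights @ feature_vector + biases
    order = np.argsort(scores)[::-1]  # highest confidence first
    return [(class_names[i], float(scores[i])) for i in order]


# Toy data: four training vectors whose three features have very different scales.
train = np.array([[1.0, 100.0, 0.01],
                  [2.0, 300.0, 0.03],
                  [3.0, 200.0, 0.02],
                  [4.0, 400.0, 0.04]])
mean, std = fit_standardiser(train)
train_z = standardise(train, mean, std)

# Hypothetical weights: in practice each row would come from one trained SVM.
class_names = ["genre_a", "genre_b", "genre_c"]
weights = np.array([[1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0],
                    [-1.0, -1.0, 0.0]])
biases = np.zeros(3)
ranking = rank_classes(train_z[1], weights, biases, class_names)
print([name for name, _ in ranking])
```

The mean and standard deviation are estimated on the training set only and then reused for every new vector, so that training and query features share the same scale.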

The pottery training images were typically rectangular, and so in order to fit them to the neural networks they were scaled to 224 pixels (along the long axis) and cropped to the middle to make them square. However, this meant omitting certain parts of wide sherds that failed to fit the central square of the image.

To train the network to work with varying amounts of decoration/background, the original image dataset was enriched by adding augmented versions of each image:

Thus from each image, 12 training images were created.

For appearance/decoration based classification, work was mainly carried out on the Majolica of Montelupo pottery. The collection of the dataset was led by the University of Pisa (UNIPI), using existing images (from archaeological excavations, PhD theses, etc.) and through multiple photography campaigns that had been carried out since 2016 (see Section 3.1). The classification of the images was based on that made by Berti (1997) and updated by Fornaciari (2016), with more than 80 decoration genres.

Most of the photos in the corpus were collected in dedicated photography campaigns in Pisa (at the Superintendency warehouse) and in Montelupo Fiorentino (at the local pottery museum). Despite the fact that the photo campaigns were carried out in a warehouse containing the largest collection of sherds of Majolica of Montelupo in the world, not every genre was represented by a high number of sherds. For 19 genres, fewer than 10 potsherd images were obtained, with six of them exhibiting only a single example.

The majority of the images were collected during the autumn of 2017, with more than 8000 sherds being photographed, covering 67 genres with more than 20 sherds, and many of them with more than 100 sherds. All the pictures have been classified by UNIPI. Finally, a social media campaign was launched to involve the world archaeological community, and obtain more images of Montelupo's Majolica from Portugal, Mexico, and Italy.

The methodology went through a comprehensive evaluation. A detailed test bed for the proposed appearance model was carried out by UNIPI, including about 400 pictures of sherds classified through a desktop application, and about 250 pictures of sherds taken and classified with mobile devices. The pictures captured with mobile devices were taken in different positions and varying amounts of environmental light. In this test the desktop version provided 42.5% top-1-accuracy and 77.9% top-5-accuracy, whereas the mobile version reported significantly lower accuracy: 33% for top-5, and only 12.8% for top-1.
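For readers unfamiliar with the metrics, top-k accuracy counts a prediction as correct when the true class appears among the k highest-ranked classes. The sketch below uses made-up rankings and labels purely for illustration; it does not reproduce the evaluation data.

```python
def top_k_accuracy(ranked_predictions, true_labels, k):
    """Fraction of samples whose true label is among the first k ranked classes."""
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)


# Hypothetical class rankings for four sherds (class names are placeholders).
ranked = [
    ["a", "b", "c"],
    ["b", "a", "c"],
    ["c", "b", "a"],
    ["b", "c", "a"],
]
truth = ["a", "a", "a", "c"]

top1 = top_k_accuracy(ranked, truth, k=1)
top2 = top_k_accuracy(ranked, truth, k=2)
print(top1, top2)  # 0.25 0.75
```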

Additional findings reported by the partners using the model indicated multiple factors that had varying effects on the classification results, including noticeable differences when altering the lighting conditions and/or background colours, and additional changes when using different cameras, or even between pairs of images that looked quite similar. This indicated a lack of stability in the classification system, which had to be addressed if it was to be used successfully in the field.

The evaluation results reported accuracy metrics that were significantly lower than the accuracy measures obtained on the test set during training, thus indicating a potential difference between the domain of the dataset images and the domain of images acquired in the field. Owing to the amount of effort already invested in the creation of the dataset, it was not possible to recapture the dataset images in 'more realistic' scenarios. Therefore, a solution was needed for retraining the model using the existing data.

Of all the factors that affected the classification results, altering the lighting had the most impact and therefore had to be addressed first. Looking at samples from the dataset, it was observed that most images were captured during the photo campaigns under similar lighting conditions, and that no colour augmentation had been applied during training. The rationale for the lack of augmentation was the belief that features extracted from images using the robust ResNet model should already have been sufficiently resilient to these sorts of changes in the image.

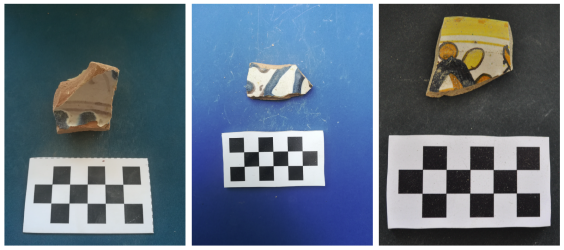

While going over the training dataset, a potentially larger cause for the failure of the classifier to generalise from the training set to images captured in the mobile app was observed (Figure 10). Within each photo campaign, most images were captured in very similar conditions, including similar backgrounds and similar ruler size/shape/position. This means the classifier may have learned features that are only relevant to the specific photo campaign (background and ruler) and used these in the classification process. This may explain the significant gap in the performance on images in the desktop app (images from the photo campaigns) and images in the mobile version (images captured in new conditions).

The solution to the first problem (lack of robustness to lighting/colour changes) was to add automatic augmentation, simulating different white balance results, and various brightness and contrast adjustments. This was applied during the generation of the training dataset by multiplying the luminosity ('brightness') of all the pixels within each image, using a randomised factor (between 0.8 and 1.2) per image, to simulate different lighting conditions. To compensate for different white balances, a similar random multiplicative factor was applied to each channel in the image; each of the Red/Green/Blue channels was multiplied by a separate random constant factor, in order to change the ratio between colours in the image.
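The colour augmentation can be sketched in a few lines of NumPy. The 0.8 to 1.2 range for the brightness factor is taken from the text; reusing the same range for the per-channel white-balance factors, scaling RGB values directly as a stand-in for luminosity, and the function name `augment_colour` are all assumptions of this sketch.

```python
import numpy as np


def augment_colour(image, rng, low=0.8, high=1.2):
    """Apply one random brightness factor and three random channel gains.

    image: uint8 array of shape (height, width, 3) in RGB order.
    The range for the channel gains is an assumption; the text only
    describes them as 'similar' to the brightness factor.
    """
    brightness = rng.uniform(low, high)            # one factor per image
    channel_gain = rng.uniform(low, high, size=3)  # one factor per R/G/B channel
    scaled = image.astype(np.float32) * brightness * channel_gain
    return np.clip(scaled, 0, 255).astype(np.uint8)


rng = np.random.default_rng(42)
sherd = np.full((4, 4, 3), 128, dtype=np.uint8)  # flat grey stand-in for a photo
augmented = augment_colour(sherd, rng)
print(augmented[0, 0])
```

Because the factors are drawn once per image, every pixel is changed consistently, which mimics a different exposure or white-balance setting rather than adding per-pixel noise.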

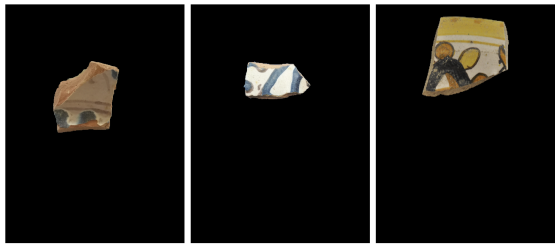

To solve the second problem (learning from details specific to a photo campaign), the ideal solution would have been to separate the sherd from the background, to provide an isolated view of the relevant part of the picture (Figure 11). To extract the foreground, the GrabCut algorithm for interactive foreground extraction (Rother et al. 2004) was integrated into the application used by the archaeologists. This enabled the users, with a few simple clicks, to reliably extract the foreground from most images.

While it was sufficient for images captured in the app, the training dataset contained thousands of images that had to be extracted from the background in order to fix the training process. To avoid the human labour required to perform segmentation manually, an automated solution was developed which benefits from the same phenomenon that originally hampered the training — the homogeneous capture process. The images, as can be seen in Figure 10, have a mostly uniform background, a potsherd and a ruler. For these images, a heuristic technique was devised to provide an automatic input for the GrabCut algorithm, instead of requiring manual input.

First, colour samples from the background were obtained by sampling pixels around the border of the image, relying on the fact that both the ruler and the potsherd are centred in the image and do not touch the image edges. Using these samples, the distance of each pixel in the image from the nearest sampled background colour was measured. A threshold operation was then applied to obtain two large connected regions corresponding to the ruler and the potsherd. To make sure the image was indeed segmented correctly, it was necessary to verify that one of these regions was a ruler. As the rulers have a checkerboard pattern, corner detection algorithms were able to detect corners with strong activation scores at the corners of each square. To detect the corners, Harris corner detection was applied, with at least five corners needing to be present after dropping detections near the edges of the area. To distinguish between the ruler and the potsherd (which may exhibit details with 'corners'), a 'checkerboard error' was computed, based on the observation that, at the corners of the ruler's squares, each image patch should be roughly identical to its own rotation by 180 degrees. By computing the geometric mean of the differences between the patches around the corners and their rotated versions, it was possible to rank how likely it was that each region had the checkerboard pattern, and was therefore the ruler. Finally, once the segmented area containing the potsherd was identified, GrabCut was applied to obtain a finer segmentation.
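Two of these steps, the border-based background thresholding and the 'checkerboard error', can be sketched with NumPy alone. This is a simplified illustration under stated assumptions, not the project's implementation: the threshold, patch size and sample count are invented values, the error is taken as the mean absolute difference per patch, and connected-component labelling, Harris corner detection and GrabCut are omitted.

```python
import numpy as np


def foreground_mask(image, threshold=40.0, max_samples=64):
    """Mark pixels whose colour is far from every colour sampled on the border.

    image: array of shape (height, width, 3). The threshold and max_samples
    values are illustrative assumptions, not taken from the article.
    """
    pixels = image.astype(np.float32)
    border = np.concatenate([pixels[0], pixels[-1], pixels[:, 0], pixels[:, -1]])
    step = max(1, len(border) // max_samples)
    samples = border[::step]  # subset of background colours
    diff = pixels[:, :, None, :] - samples[None, None, :, :]
    nearest = np.linalg.norm(diff, axis=-1).min(axis=-1)
    return nearest > threshold


def checkerboard_error(gray, corners, half=3):
    """Geometric mean, over corners, of the mean absolute difference between
    a patch and its 180-degree rotation. Lower means more checkerboard-like.

    Each corner (row, col) is the pixel just below and to the right of the
    junction, so the even-sized patch is centred on the junction itself.
    Corners closer than `half` pixels to the image edge must be dropped first.
    """
    errors = []
    for row, col in corners:
        patch = gray[row - half:row + half, col - half:col + half].astype(np.float32)
        errors.append(np.abs(patch - np.rot90(patch, 2)).mean() + 1e-6)
    return float(np.exp(np.mean(np.log(errors))))


# Synthetic photo: uniform light background with a darker central "sherd".
photo = np.full((20, 20, 3), 200, dtype=np.uint8)
photo[5:15, 5:15] = (120, 60, 30)
mask = foreground_mask(photo)

# Synthetic ruler (4-pixel squares) versus a smooth horizontal ramp.
board = ((np.indices((16, 16)) // 4).sum(axis=0) % 2) * 255
ramp = np.tile(np.arange(16) * 10, (16, 1))
corners = [(4, 4), (4, 8), (8, 8), (12, 12)]
ruler_score = checkerboard_error(board, corners)
ramp_score = checkerboard_error(ramp, corners)
print(mask.sum(), ruler_score < ramp_score)
```

On the synthetic checkerboard each patch equals its own rotation, so the score is close to zero, while the ramp produces a much larger value; ranking regions by this score is what allows the ruler to be told apart from the potsherd.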

After obtaining the processed training dataset, which was much larger than the original due to the augmentations, the SVM classifier was replaced by a neural network. The network contained two dropout layers, which should make the model learn a more robust representation and decrease the chances of overfitting the training data. Furthermore, in order to boost the application performance (runtime), the 'heavy' ResNet-101 model was replaced by a much lighter ResNet-50 model. The image recognition classifiers (algorithms) developed for ArchAIDE are also freely available on GitHub at https://github.com/barak-itkin/archaide-software.

Internet Archaeology is an open access journal based in the Department of Archaeology, University of York. Except where otherwise noted, content from this work may be used under the terms of the Creative Commons Attribution 3.0 (CC BY) Unported licence, which permits unrestricted use, distribution, and reproduction in any medium, provided that attribution to the author(s), the title of the work, the Internet Archaeology journal and the relevant URL/DOI are given.

